※ Notes on Prof. Sung Kim's (김성훈) lecture series [Deep Learning for Everyone (모두를 위한 딥러닝)]

- References: Andrew Ng's ML class

1) https://class.coursera.org/ml-003/lecture

2) http://holehouse.org/mlclass/ (note)

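1. Hypothesis using a matrix

- H(x1, x2, x3) = x1w1 + x2w2 + x3w3

-> H(X) = XW, where each row of X is one sample [x1 x2 x3] and W is the column vector [w1 w2 w3]^T (the bias b is left out of the formula here; the code in section 2 adds it)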
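To make the equivalence concrete, here is a minimal sketch in plain Python (no TensorFlow) that evaluates the hypothesis both ways on the five samples used later in this post. The weight values and the `matmul` helper are assumptions made up for the illustration; they are not from the lecture.

```python
# Illustrative sketch: the per-feature sum and the matrix product XW
# give the same hypothesis values. Weights are hypothetical demo values.

x_data = [[73., 80., 75.],
          [93., 88., 93.],
          [89., 91., 90.],
          [96., 98., 100.],
          [73., 66., 70.]]      # X: 5 samples x 3 features
w = [[0.5], [0.5], [1.0]]       # W: 3 x 1 (made-up values)


def matmul(a, b):
    """Plain-Python matrix product of a (n x k) and b (k x m)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


# Scalar form: H(x1, x2, x3) = x1*w1 + x2*w2 + x3*w3, one sample at a time.
scalar_form = [x1 * w[0][0] + x2 * w[1][0] + x3 * w[2][0]
               for x1, x2, x3 in x_data]

# Matrix form: H(X) = XW, all samples at once; the result is 5 x 1.
matrix_form = matmul(x_data, w)

for s, m in zip(scalar_form, matrix_form):
    print(s, m[0])
```

Each printed pair should match. The matrix form also works unchanged for any number of rows in X, since only the inner dimension (3 features) has to agree with W.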

2. TensorFlow implementation

2-1. Previous approach (one placeholder and one weight per feature)

- Note: the code in this post uses the TensorFlow 1.x graph API (tf.placeholder, tf.Session, tf.train.GradientDescentOptimizer). In TensorFlow 2.x these names are available under tf.compat.v1, with eager execution disabled.

| import tensorflow as tf |

| tf.set_random_seed(777) # for reproducibility |

| x1_data = [73., 93., 89., 96., 73.] |

| x2_data = [80., 88., 91., 98., 66.] |

| x3_data = [75., 93., 90., 100., 70.] |

| y_data = [152., 185., 180., 196., 142.] |

| # Placeholders for tensors that are always fed through feed_dict. |

| x1 = tf.placeholder(tf.float32) |

| x2 = tf.placeholder(tf.float32) |

| x3 = tf.placeholder(tf.float32) |

| Y = tf.placeholder(tf.float32) |

| w1 = tf.Variable(tf.random_normal([1]), name='weight1') |

| w2 = tf.Variable(tf.random_normal([1]), name='weight2') |

| w3 = tf.Variable(tf.random_normal([1]), name='weight3') |

| b = tf.Variable(tf.random_normal([1]), name='bias') |

| hypothesis = x1 * w1 + x2 * w2 + x3 * w3 + b |

| # cost/loss function |

| cost = tf.reduce_mean(tf.square(hypothesis - Y)) |

| # Minimize. Need a very small learning rate for this data set |

| optimizer = tf.train.GradientDescentOptimizer(learning_rate=1e-5) |

| train = optimizer.minimize(cost) |

| # Launch the graph in a session. |

| sess = tf.Session() |

| # Initializes global variables in the graph. |

| sess.run(tf.global_variables_initializer()) |

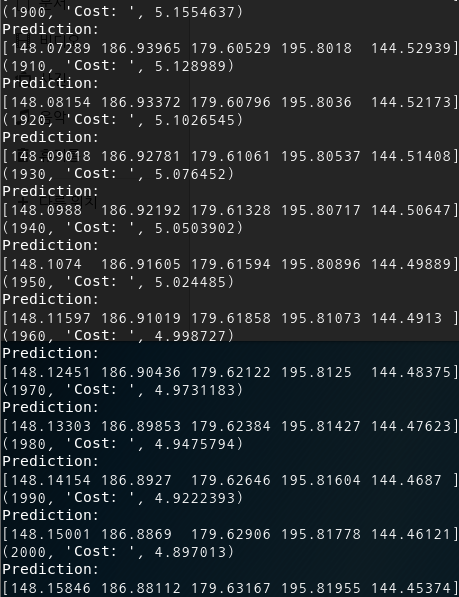

| for step in range(2001): |

|     cost_val, hy_val, _ = sess.run([cost, hypothesis, train], |

|                                    feed_dict={x1: x1_data, x2: x2_data, x3: x3_data, Y: y_data}) |

|     if step % 10 == 0: |

|         print(step, "Cost: ", cost_val, "\nPrediction:\n", hy_val) |
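The comment in the code above says this data set needs a very small learning rate. The sketch below is one way to see why, using plain Python rather than TensorFlow: it runs a few gradient descent steps on the same mean squared error cost with two learning rates. Starting from zero weights, the helper names (`predict`, `cost`, `run`), and the comparison value 1e-3 are assumptions chosen for the demonstration; the post itself uses random initialization and only 1e-5.

```python
# Illustrative sketch (plain Python, not TensorFlow): gradient descent on
# the same mean-squared-error cost, run with two different learning rates.

x_data = [[73., 80., 75.],
          [93., 88., 93.],
          [89., 91., 90.],
          [96., 98., 100.],
          [73., 66., 70.]]
y_data = [152., 185., 180., 196., 142.]


def predict(w, b, row):
    """Hypothesis for one sample: x1*w1 + x2*w2 + x3*w3 + b."""
    return sum(wi * xi for wi, xi in zip(w, row)) + b


def cost(w, b):
    """Mean of squared errors over all samples."""
    errors = [predict(w, b, row) - y for row, y in zip(x_data, y_data)]
    return sum(e * e for e in errors) / len(errors)


def run(learning_rate, steps):
    """Run `steps` updates from zero weights and return the final cost."""
    w, b = [0.0, 0.0, 0.0], 0.0   # assumption: zero start instead of random
    n = len(x_data)
    for _ in range(steps):
        errors = [predict(w, b, row) - y for row, y in zip(x_data, y_data)]
        # d(cost)/d(w_j) = (2/n) * sum_i(error_i * x_ij)
        grad_w = [2.0 / n * sum(e * row[j] for e, row in zip(errors, x_data))
                  for j in range(3)]
        # d(cost)/d(b) = (2/n) * sum_i(error_i)
        grad_b = 2.0 / n * sum(errors)
        w = [wj - learning_rate * gj for wj, gj in zip(w, grad_w)]
        b -= learning_rate * grad_b
    return cost(w, b)


initial = cost([0.0, 0.0, 0.0], 0.0)
small = run(1e-5, 10)   # the learning rate used in this post
large = run(1e-3, 10)   # hypothetical larger rate, for comparison only

print("initial cost:          ", initial)
print("lr=1e-5 after 10 steps:", small)
print("lr=1e-3 after 10 steps:", large)
```

With inputs near 100, the gradients are large, so the larger rate overshoots and the cost grows instead of shrinking, while 1e-5 lowers it from the starting value.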

2-2. Using a matrix

| import tensorflow as tf |

| tf.set_random_seed(777) # for reproducibility |

| x_data = [[73., 80., 75.], |

|           [93., 88., 93.], |

|           [89., 91., 90.], |

|           [96., 98., 100.], |

|           [73., 66., 70.]] |

| y_data = [[152.], |

|           [185.], |

|           [180.], |

|           [196.], |

|           [142.]] |

| # Placeholders for tensors that are always fed through feed_dict. |

| X = tf.placeholder(tf.float32, shape=[None, 3]) |

| Y = tf.placeholder(tf.float32, shape=[None, 1]) |

| W = tf.Variable(tf.random_normal([3, 1]), name='weight') |

| b = tf.Variable(tf.random_normal([1]), name='bias') |

| # Hypothesis |

| hypothesis = tf.matmul(X, W) + b |

| # Simplified cost/loss function |

| cost = tf.reduce_mean(tf.square(hypothesis - Y)) |

| # Minimize |

| optimizer = tf.train.GradientDescentOptimizer(learning_rate=1e-5) |

| train = optimizer.minimize(cost) |

| # Launch the graph in a session. |

| sess = tf.Session() |

| # Initializes global variables in the graph. |

| sess.run(tf.global_variables_initializer()) |

| for step in range(2001): |

|     cost_val, hy_val, _ = sess.run( |

|         [cost, hypothesis, train], feed_dict={X: x_data, Y: y_data}) |

|     if step % 10 == 0: |

|         print(step, "Cost: ", cost_val, "\nPrediction:\n", hy_val) |
